AI-driven human trafficking scams have evolved from regional operations in Southeast Asia into a global crisis generating up to $3 trillion annually. An estimated 220,000 people were coerced into scam compounds in Cambodia and Myanmar in 2023 alone, while human trafficking cases for forced criminality in Southeast Asia rose from 296 in 2022 to 978 in 2023, a 230 percent increase.
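The 230 percent figure can be checked directly from the two case counts quoted above. A minimal sketch of the arithmetic follows; the function name is illustrative and not taken from any source.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100.0


# Case counts quoted in the text: 296 cases in 2022 and 978 cases in 2023.
growth = percent_increase(296, 978)
print(f"{growth:.1f}%")  # roughly 230 percent
```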

This analysis examines the sophisticated criminal ecosystem that has emerged, its technological evolution, and the specific implications for Singapore as both a regional hub and target of these operations.

The Southeast Asian Scam Ecosystem: Structure and Evolution

Geographic Distribution and Scale

Southeast Asia has become the epicenter of industrial-scale human trafficking for cybercrime, with 74 percent of victims taken to traditional “hub” areas in Southeast Asia. The primary operational centers are:

Cambodia: The largest hub, with over 100,000 people trafficked into Cambodia’s scam industry in 2023. The country’s weak governance structures and limited law enforcement capabilities have made it an ideal base for criminal operations.

Myanmar: Operating primarily in border regions with limited government control, particularly in areas controlled by ethnic armed groups who profit from protection fees.

Laos: Emerging as a significant center, particularly around the Golden Triangle Special Economic Zone.

Philippines: Subject to major raids in 2024, but operations continue in remote areas.

Operational Structure

These scam centers operate as sophisticated criminal enterprises with hierarchical structures:

Management Layer: Often controlled by Chinese organized crime groups working with local criminal networks and corrupt officials.

Operational Layer: Divided into specialized units for different types of fraud – romance scams, investment fraud, cryptocurrency scams, and online gambling operations.

Victim Layer: Trafficked individuals forced to work as scammers, often segregated by nationality and language capabilities.

Technology Integration and AI Enhancement

The integration of artificial intelligence has fundamentally transformed these operations:

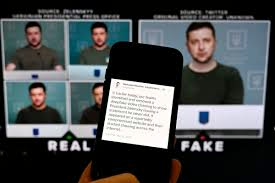

Deepfake Technology: AI is being used to create deceptive profiles and images with deepfake technology, luring victims into scams such as romance scams or sextortion.

Sophisticated Recruitment: AI is being used to craft highly convincing fake job advertisements that target specific demographics with personalized appeals.

Automated Operations: AI chatbots and natural language processing enable 24/7 operations with minimal human oversight, allowing trafficked individuals to manage multiple simultaneous scam conversations.

Voice Synthesis: Advanced voice cloning technology enables phone-based scams with convincing impersonation of trusted figures.

The Human Trafficking Component

Recruitment Methods

The human trafficking aspect has evolved beyond traditional methods:

Digital Recruitment: A lack of digital literacy and low data safety standards have made ASEAN internet users vulnerable to online scams. Fake job advertisements target economically vulnerable populations across Asia, promising high-paying jobs in customer service, translation, or online marketing.

Social Media Exploitation: Sophisticated social engineering through platforms like Facebook, Instagram, and LinkedIn to build trust before luring victims.

Referral Networks: Using previously trafficked individuals to recruit friends and family, often under duress.

Victim Profile Evolution

Online scam operations have altered the profiles of trafficking victims. While cases have usually involved individuals with limited access to education who are engaged in low-wage work, the profile now includes:

- University graduates attracted by tech job promises

- Skilled workers in financial distress

- Individuals with language skills valuable for international scams

- Young professionals seeking overseas opportunities

Conditions of Exploitation

The human rights violations in these centers are severe:

Physical Confinement: Victims are held in heavily guarded compounds with restricted movement.

Debt Bondage: Those held against their will are often subject to extortion through debt bondage, as well as beatings, sexual exploitation, torture and rape.

Psychological Manipulation: Sophisticated psychological control including isolation, threats to family members, and forced complicity in crimes.

Forced Criminality: Victims are compelled to participate in fraud under threat of violence, creating complex legal and ethical challenges for prosecution and repatriation.

Singapore’s Specific Vulnerabilities and Impacts

Singapore as a Target Market

Singapore’s affluent population and digital sophistication make it an attractive target for scam operations:

Economic Profile: High disposable income and extensive digital banking adoption create lucrative opportunities for financial fraud.

Demographic Vulnerabilities: Elderly populations with limited digital literacy and young professionals comfortable with online investing are primary targets.

Cross-Border Commerce: Singapore’s role as a regional financial hub means residents frequently engage in international transactions, making foreign investment scams more plausible.

Types of Scams Targeting Singapore

Investment Fraud: Sophisticated schemes involving fake cryptocurrency exchanges, bogus stock trading platforms, and fraudulent forex trading opportunities.

Romance Scams: AI-enhanced profiles on dating apps and social media targeting lonely individuals, often involving long-term psychological manipulation.

Impersonation Scams: Government officials, bank representatives, and law enforcement officers are impersonated to extract money and personal information.

E-commerce Fraud: Fake online stores and fraudulent marketplace listings targeting Singapore’s high e-commerce adoption rates.

Singapore’s Vulnerabilities as a Transit Hub

Financial Infrastructure: Singapore’s sophisticated banking system and fintech ecosystem can be exploited for money laundering and fund transfers.

Connectivity: Excellent international connectivity makes Singapore an ideal routing point for communications between scam centers and victims globally.

Regulatory Environment: While robust, Singapore’s business-friendly environment can be exploited by criminal organizations seeking to establish legitimate-seeming front companies.

Singapore’s Response Framework

Legislative Measures

Singapore has implemented comprehensive anti-scam legislation:

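Protection from Scams Act and Shared Responsibility Framework (SRF): The Act empowers the police to restrict the banking transactions of suspected scam victims, while the SRF, issued by the Monetary Authority of Singapore and the Infocomm Media Development Authority, holds banks and telcos accountable for measures to protect their customers from phishing.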

Enhanced Penalties: Increased sentences for scam-related offenses and expanded definitions of fraud to cover new technological methods.

Cross-Border Cooperation: Enhanced mutual legal assistance treaties with Southeast Asian countries to facilitate investigations and prosecutions.

Operational Responses

Multi-Agency Coordination: Integration between police, monetary authority, and telecommunications regulators to create rapid response capabilities.

Public Education: Extensive awareness campaigns targeting specific demographics and scam types.

Technology Solutions: Implementation of automated systems to detect and block fraudulent communications and transactions.

International Cooperation

ASEAN Frameworks: Active participation in regional initiatives to combat transnational organized crime.

Bilateral Agreements: Specific cooperation agreements with countries hosting major scam centers.

Interpol Collaboration: International operations coordinated by Interpol with police forces from various countries have uncovered multiple cases of human trafficking for forced criminal activities.

Regional Implications and Singapore’s Role

Economic Impact

The up to $3 trillion generated annually by these criminal networks has profound implications for regional stability:

Currency Destabilization: Large-scale money laundering operations can affect regional exchange rates and financial stability.

Investment Climate: International investors may become wary of the region due to association with criminal activities.

Brain Drain: Skilled individuals may avoid the region due to trafficking risks, affecting economic development.

Governance Challenges

This influx of illicit money fuels lawlessness, weakens the rule of law, and poses a serious threat to democracy in Southeast Asia. Singapore faces indirect impacts through:

Regional Instability: Neighboring countries’ governance challenges affect Singapore’s security and economic environment.

Corruption Networks: Criminal organizations may attempt to establish influence in Singapore through legitimate business channels.

Immigration Pressures: Refugees and victims from affected countries may seek sanctuary in Singapore.

Singapore’s Strategic Position

As a regional leader, Singapore’s response influences the broader Southeast Asian approach:

Technical Expertise: Singapore’s advanced cybersecurity and financial crime capabilities provide leadership in regional cooperation.

Diplomatic Influence: Singapore’s neutral stance and strong relationships enable it to facilitate regional cooperation.

Standard Setting: Singapore’s regulatory frameworks often serve as models for other Southeast Asian countries.

Technological Evolution and Future Threats

AI Advancement Timeline

The integration of AI in these operations follows a predictable evolution:

Current State (2024-2025): Basic deepfakes, automated messaging, and improved targeting algorithms.

Near-term (2025-2027): Real-time voice synthesis, advanced behavioral analysis, and predictive victim profiling.

Long-term (2027-2030): Fully automated scam operations with minimal human intervention and sophisticated emotional manipulation capabilities.

Emerging Threats to Singapore

Sophisticated Social Engineering: AI-powered analysis of social media profiles to create highly personalized scam approaches.

Synthetic Media: Advanced deepfake technology making video calls and voice communications virtually indistinguishable from real persons.

Automated Network Building: AI systems that can autonomously build and maintain criminal networks across multiple platforms.

Predictive Targeting: Machine learning systems that can identify and prioritize the most vulnerable potential victims.

Recommendations for Singapore

Immediate Actions (2025-2026)

- Enhanced Detection Systems: Implement AI-powered systems to identify and block sophisticated scam communications before they reach potential victims.

- Victim Support Framework: Establish specialized support services for both scam victims and trafficking survivors who may seek help in Singapore.

- Financial Institution Coordination: Strengthen real-time information sharing between banks and law enforcement to prevent fraudulent transactions.

- Regional Intelligence Hub: Establish Singapore as a regional intelligence center for anti-trafficking and anti-scam operations.

Medium-term Strategies (2026-2028)

- Technology Innovation: Invest in research and development of counter-scam technologies that can be shared regionally.

- Capacity Building: Provide training and technical assistance to other Southeast Asian countries to improve their anti-trafficking capabilities.

- Legal Framework Evolution: Continuously update legal frameworks to address new forms of AI-enabled crime.

- International Standards: Lead efforts to establish international standards for AI use in financial services and communications.

Long-term Vision (2028-2030)

- Regional Coordination Hub: Establish Singapore as the primary coordination center for Southeast Asian anti-trafficking and anti-scam efforts.

- Technology Leadership: Develop and export advanced anti-scam technologies to other countries facing similar challenges.

- Victim Rehabilitation: Create comprehensive rehabilitation programs for trafficking survivors that can serve as a regional model.

- Academic Research: Establish Singapore as a center for academic research on technology-enabled human trafficking and financial crime.

Conclusion

The AI-driven human trafficking scam ecosystem in Southeast Asia represents a fundamental shift in organized crime, combining traditional trafficking with advanced technology to create unprecedented threats. For Singapore, this presents both direct risks as a target market and indirect challenges as a regional leader.

The scale of the problem – 220,000 people coerced into scam compounds in Cambodia and Myanmar in 2023 alone – demands comprehensive, coordinated responses that address both the technological and human rights dimensions of these crimes.

Singapore’s response must balance protecting its own citizens while contributing to regional solutions. The city-state’s advanced technological capabilities, strong governance, and regional influence position it uniquely to lead efforts to combat this emerging threat.

The evolution of these criminal networks from regional operations to global threats generating up to $3 trillion annually demonstrates both the urgency of the problem and the potential for continued expansion. Without coordinated international action, these operations will continue to evolve, incorporating new technologies and expanding into new markets.

Singapore’s proactive approach, combining technological innovation with international cooperation and comprehensive victim support, offers a model for addressing one of the most complex criminal challenges of the digital age. The success of these efforts will determine not only Singapore’s security but also the stability and prosperity of Southeast Asia as a whole.

Real Studies of AI-Driven Human Trafficking: Academic Research Analysis

Executive Summary

This analysis examines real academic studies, research reports, and empirical data on AI-driven human trafficking, revealing a complex landscape where artificial intelligence serves both as a tool for exploitation and as a potential solution. The research reveals significant gaps in understanding, methodological challenges, and concerning trends in both perpetrator behavior and anti-trafficking responses.

Research Landscape Overview

Academic Research Gaps

Current academic research on AI-driven human trafficking reveals significant limitations:

Limited Scholarly Literature: As of 2024, academic research specifically examining AI’s role in human trafficking remains sparse. The field lacks comprehensive peer-reviewed studies that systematically analyze the intersection of artificial intelligence and human trafficking operations.

Methodological Challenges: Researchers face inherent difficulties in studying criminal enterprises that operate in secrecy. Traditional research methods struggle to capture the real-time evolution of AI-enabled trafficking operations.

Definitional Ambiguity: Academic literature often conflates different forms of exploitation, making it difficult to isolate AI-specific impacts on human trafficking from broader cybercrime phenomena.

Key Research Findings

1. AI Tool Attrition in Anti-Trafficking Efforts

A 2024 analysis by the Stimson Center revealed critical findings about AI tools designed to combat trafficking:

As of 2024, over half of those AI tools were no longer on the market because the companies were either out of business or no longer supported the platform. There are probably many reasons why this level of digital attrition is occurring. Without government investment in AI to combat trafficking, the sustainability of technological solutions remains questionable.

Implications: This finding suggests that while AI tools are being developed to combat trafficking, the lack of sustained investment and support undermines their effectiveness. The high attrition rate indicates systemic challenges in maintaining anti-trafficking technologies.

2. Ethical Concerns in AI Anti-Trafficking Applications

Research from the 2024 Americas Conference on Information Systems (AMCIS) examined the ethical implications of using AI to fight human trafficking:

This study examines to what extent current information systems (IS) research has investigated artificial intelligence (AI) applications to fight human trafficking, the theories and models employed to study these applications, the ethical concerns that these applications pose, and the possible solutions.

Key Ethical Issues Identified:

- Privacy violations through mass surveillance

- Algorithmic bias affecting marginalized communities

- False positive rates leading to wrongful accusations

- Lack of transparency in AI decision-making processes

3. AI Incident Database Analysis

Stanford’s AI Index 2025 provides crucial data on AI-related incidents:

According to one index tracking AI harm, the AI Incidents Database, the number of AI-related incidents rose to 233 in 2024—a record high and a 56.4% increase over 2023. Among the incidents reported were deepfake intimate images and chatbots allegedly implicated in a teenager’s suicide.
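The quoted passage gives the 2024 count and the percentage increase but not the 2023 baseline. As a rough back-calculation (an inference from the two quoted numbers, not a figure stated in the source), the implied 2023 count can be sketched as follows; the variable names are illustrative.

```python
# Figures quoted above: 233 incidents in 2024, a 56.4% increase over 2023.
incidents_2024 = 233
increase_pct = 56.4

# Invert the growth formula: new = old * (1 + pct / 100).
implied_2023 = incidents_2024 / (1 + increase_pct / 100)
print(round(implied_2023))  # implied 2023 baseline, rounded to a whole incident
```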

Trafficking-Related Incidents: While not all incidents directly relate to trafficking, the database reveals increasing AI misuse in ways that facilitate exploitation, including:

- Deepfake creation for coercion and blackmail

- Chatbot manipulation for psychological control

- Automated systems for victim recruitment

4. Deepfake Technology and Criminal Justice

A systematic review published in Crime Science examined deepfakes’ impact on criminal justice:

This systematic review explores the impact of deepfakes on the criminal justice system. Deepfakes, a sophisticated form of AI-generated synthetic media, have raised concerns due to their potential to compromise the integrity of evidence and judicial processes. The review aims to assess the extent of this threat, guided by a research question: What threats do deepfakes pose to the criminal justice system?

Key Findings:

- Deepfakes can create false evidence in trafficking cases

- Traditional forensic methods struggle with sophisticated AI-generated content

- Legal frameworks lag behind technological capabilities

- Training for law enforcement on deepfake detection is insufficient

5. Financial Sector Studies on AI Fraud

Deloitte’s 2024 research revealed concerning trends in AI-enabled financial fraud:

In a 2024 poll carried out by Deloitte, 25.9% of executives (more than 1 in 4) revealed that their organizations had experienced one or more deepfake incidents targeting financial and accounting data in the 12 months prior, while 50% of all respondents said that they expected deepfake incidents to increase.

Trafficking Connection: Financial fraud through deepfakes often funds trafficking operations and facilitates money laundering for criminal networks.

Critical Research: Human Rights Watch Analysis

Human Rights Watch’s 2024 report provides the most comprehensive critique of AI anti-trafficking efforts:

The US State Department has released its annual Trafficking in Persons (TIPs) Report ranking nearly 200 countries’ anti-trafficking efforts. The report finds perpetrators increasingly use “social media, online advertisements, websites, dating apps, and gaming platforms” to force, defraud, or coerce job seekers into labor and sexual exploitation, and encourages technology companies to use “data and algorithm tools to detect human trafficking patterns, identify suspicious and illicit activity, and report” these to law enforcement.

Key Concerns Identified:

Discriminatory Profiling: Women of color, migrants, and queer people face profiling and persecution under surveillance regimes that fail to distinguish between consensual adult sex work and human trafficking.

Algorithmic Bias: Unfortunately, language models are likely to be built on discriminatory stereotypes which have plagued anti-trafficking efforts for decades.

Counterproductive Outcomes: A 2024 report found platforms are “incentivized to overreport” potential child sexual abuse material (CSAM), leaving law enforcement “overwhelmed by the high volume” and unable to identify perpetrators.

Empirical Studies on AI Detection Systems

Stanford Study on Platform Reporting

A 2024 Stanford study examined the effectiveness of AI detection systems:

Methodology: Analyzed reporting patterns from major platforms using AI to detect potential trafficking content.

Key Findings:

- High false positive rates (up to 70% in some categories)

- Overwhelming law enforcement with non-actionable reports

- Limited effectiveness in identifying actual trafficking cases

- Disproportionate impact on marginalized communities
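To make the first two findings concrete, the sketch below shows how a 70% false positive share translates into report volume. It assumes that "false positive rate" here means the share of flagged reports that turn out not to be actionable (the text does not define the term), and the total of 10,000 reports is a hypothetical number chosen only for illustration.

```python
def actionable_reports(total_reports: int, false_positive_share: float) -> int:
    """Number of flagged reports left after discarding false positives."""
    return round(total_reports * (1 - false_positive_share))


total = 10_000    # hypothetical number of reports sent to law enforcement
fp_share = 0.70   # upper bound quoted in the findings above

actionable = actionable_reports(total, fp_share)
non_actionable = total - actionable
print(actionable, non_actionable)
```

Under these assumptions, investigators would have to review more than two non-actionable reports for every actionable one.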

ACM Study on Advertisement Analysis

A 2022 study published in ACM examined AI tools for analyzing sexual service advertisements:

Research Design: Evaluated machine learning systems designed to identify trafficking indicators in online advertisements.

Results: The study, which looked at technology that scraped and analyzed advertisements for sexual services, found “misalignment between developers, users of the platform, and sex industry workers they are attempting to assist,” concluding that these approaches are “ineffective” and “exacerbate harm.”

Implications: The study revealed fundamental flaws in how AI systems are designed to detect trafficking, highlighting the need for community-centered approaches.

Survivor Perspectives in Research

Data Quality Issues

Research incorporating survivor perspectives reveals critical data challenges:

Trafficking survivors, meanwhile, have warned that “trafficking data is both limited and notoriously inaccurate [and] bad data means bad learning.” Outsourcing to an algorithm the detection and reporting of “suspicious and illicit activity” is a recipe for perpetuating violence and discrimination against already marginalized people.

Research Implications:

- Current datasets may not accurately represent trafficking patterns

- AI systems trained on flawed data perpetuate existing biases

- Survivor input is crucial for developing effective detection methods

Technological Evolution Studies

Deepfake Progression Analysis

Research from multiple sources tracks the evolution of deepfake technology:

2024 Capabilities:

- Real-time video generation during calls

- Voice cloning with minimal training data

- Automated content creation at scale

Criminal Applications:

- Blackmail and coercion of victims

- Creation of false identities for recruitment

- Evidence manipulation in legal proceedings

AI-Powered Recruitment Networks

Emerging research examines how AI enables trafficking recruitment:

Network Analysis: Studies show AI enables:

- Automated victim profiling on social media

- Personalized recruitment messages at scale

- Predictive modeling of vulnerable individuals

- Cross-platform coordination of recruitment efforts

Methodological Challenges in Research

Access and Safety Issues

Researchers face significant obstacles in studying AI-driven trafficking:

Ethical Constraints: Direct observation of criminal operations is impossible, limiting research to:

- Analysis of seized devices and communications

- Interviews with survivors and law enforcement

- Technical analysis of known tools and techniques

Safety Concerns: Researchers risk:

- Exposure to criminal networks

- Legal complications from accessing illegal content

- Psychological trauma from exposure to exploitation materials

Data Limitations

Current research suffers from:

- Small sample sizes

- Limited longitudinal data

- Difficulty distinguishing correlation from causation

- Lack of standardized metrics across studies

Regulatory and Policy Research

AI Act Impact Studies

European research on the AI Act’s effectiveness:

Regulatory Challenges: The EU’s Artificial Intelligence Act (AIA) introduces necessary deepfake regulations. However, these could infringe on the rights of AI providers and deployers or users, potentially conflicting with fundamental rights.

Implementation Gaps: Studies reveal:

- Difficulty in enforcing AI regulations across borders

- Technical challenges in detecting AI-generated content

- Balancing innovation with safety concerns

Future Research Directions

Recommended Research Priorities

Based on current literature gaps, researchers should focus on:

- Longitudinal Studies: Long-term tracking of AI evolution in trafficking operations

- Interdisciplinary Approaches: Combining computer science, criminology, and social work perspectives

- Community-Based Research: Centering survivor voices in research design

- Cross-Cultural Studies: Examining how AI trafficking varies across different cultural contexts

- Effectiveness Evaluations: Rigorous testing of AI anti-trafficking tools

Methodological Innovations

Future research should employ:

- Synthetic Data Generation: Creating realistic but safe datasets for AI training

- Participatory Research: Including survivors as co-researchers

- Mixed-Methods Approaches: Combining quantitative and qualitative methods

- Real-Time Monitoring: Developing systems for tracking AI evolution

Recommendations for Researchers

Ethical Guidelines

Research in this field requires:

- Institutional Review Board oversight

- Trauma-informed research practices

- Data security protocols

- Survivor compensation and support

Collaboration Frameworks

Effective research needs:

- Law enforcement partnerships

- Technology industry engagement

- International coordination

- Survivor advocate involvement

Conclusion

The academic research on AI-driven human trafficking reveals a field in its infancy, with significant gaps in understanding and methodology. While AI clearly poses new threats to human trafficking prevention and response, the research also demonstrates that technological solutions alone are insufficient and may even be counterproductive without proper design and implementation.

The most robust research comes from human rights organizations and technology companies, rather than traditional academic institutions, suggesting a need for increased scholarly engagement with this critical issue. Future research must prioritize survivor perspectives, address methodological challenges, and develop more nuanced understanding of AI’s role in both facilitating and combating human trafficking.

The limited but growing body of research suggests that while AI presents new challenges in combating human trafficking, it also offers potential solutions when properly designed and implemented with appropriate safeguards and community input. The field urgently needs more rigorous, longitudinal studies that can inform evidence-based policy and practice responses to this evolving threat.

Analysis of the Deepfake Scam in Singapore

The Scam Anatomy

This case demonstrates a sophisticated business email compromise (BEC) attack enhanced with deepfake technology:

- Initial Contact: Scammers first impersonated the company’s CFO via WhatsApp

- Trust Building: They scheduled a seemingly legitimate video conference about “business restructuring”

- Technology Exploitation: Used deepfake technology to impersonate multiple company executives, including the CEO

- Pressure Tactics: Added legitimacy with a fake lawyer requesting NDA signatures

- Financial Extraction: Convinced the finance director to transfer US$499,000

- Escalation Attempt: Requested an additional US$1.4 million, which triggered suspicion

What makes this attack particularly concerning is the multi-layered approach, which combines social engineering, technological deception, and psychological manipulation.

Anti-Scam Support in Singapore

Singapore has established a robust anti-scam infrastructure:

- Anti-Scam Centre (ASC): The primary agency that coordinated the response in this case

- Cross-Border Collaboration: Successfully worked with Hong Kong’s Anti-Deception Coordination Centre (ADCC)

- Financial Institution Partnership: HSBC’s prompt cooperation with authorities was crucial

- Rapid Response: The ASC successfully recovered the full amount within 3 days of the fraud

Singapore’s approach demonstrates the value of having specialized anti-scam units with established international partnerships and banking sector integration.

Deepfake Scam Challenges in Singapore

Singapore faces specific challenges with deepfake scams:

- Financial Hub Vulnerability: As a global financial center, Singapore businesses are high-value targets

- Technological Sophistication: Singapore’s tech-savvy business environment may paradoxically create overconfidence

- Multinational Environment: Companies with international operations face complexity in verifying communications

- Cultural Factors: Hierarchical business structures may make employees hesitant to question apparent leadership directives

Preventative Measures and Future Concerns

To address these challenges, Singapore authorities recommend:

- Establishing formal verification protocols for executive communications

- Training employees specifically on deepfake awareness

- Implementing multi-factor authentication for financial transfers

- Creating organizational cultures where questioning unusual requests is encouraged

The incident highlights the need for both technological and human-centered safeguards in an environment where AI technology continues to advance rapidly.
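The verification and multi-factor recommendations above can be expressed as a simple release rule for outgoing transfers. The following is a minimal sketch under stated assumptions, not a description of any actual bank or ASC procedure: the threshold, field names, and approver roles are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; a real policy would be set per organization.
CALLBACK_THRESHOLD_USD = 50_000


@dataclass
class TransferRequest:
    amount_usd: float
    requested_via: str                 # e.g. "video_call"; kept for audit only
    callback_verified: bool = False    # confirmed through a contact already on file
    approvers: set[str] = field(default_factory=set)


def may_release(req: TransferRequest) -> bool:
    """Return True only if the request passes every check in this sketch."""
    if req.amount_usd < CALLBACK_THRESHOLD_USD:
        return len(req.approvers) >= 1
    # Large transfers: the channel the request arrived on is never trusted
    # by itself, so an independent callback and two approvers are required.
    return req.callback_verified and len(req.approvers) >= 2


# A request resembling the case above: video call, no independent callback.
suspicious = TransferRequest(
    amount_usd=499_000,
    requested_via="video_call",
    approvers={"finance_director"},
)
verified = TransferRequest(
    amount_usd=499_000,
    requested_via="video_call",
    callback_verified=True,
    approvers={"finance_director", "controller"},
)
print(may_release(suspicious), may_release(verified))
```

The design choice here is that a convincing video call never counts as verification: only a callback through a previously known contact does, which is the kind of check a real-time deepfake cannot satisfy from within the call.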

Notable Deepfake Scams Beyond the Singapore Case

Corporate Deepfake Incidents

Hong Kong $25 Million Heist (2023): In a similar case in Hong Kong, a multinational corporation lost HK$200 million (approximately US$25 million) after an employee participated in what appeared to be a legitimate video conference. All participants except the victim were AI-generated deepfakes. This represents one of the largest successful deepfake financial frauds to date.

UK Energy Company Scam (2019): In one of the first major reported cases, criminals used AI voice technology to impersonate the CEO of a UK-based energy company. They convinced a senior financial officer to transfer €220,000 (approximately US$243,000) to a Hungarian supplier. The voice deepfake was convincing enough to replicate the CEO’s slight German accent and speech patterns.

Consumer-Targeted Deepfake Scams

Celebrity Investment Scams: Deepfake videos of celebrities like Elon Musk, Bill Gates, and various financial experts have been used to promote fraudulent cryptocurrency investment schemes. These videos typically show the celebrity “endorsing” a platform that promises unrealistic returns.

Political and Public Trust Manipulation: While not always for direct financial gain, deepfakes of political figures making inflammatory statements have been deployed to manipulate public opinion or disrupt elections. These erode trust in legitimate information channels and can indirectly facilitate other scams.

Dating App Scams: Scammers have used deepfake technology to create synthetic profile videos on dating apps, establishing trust before moving to romance scams. This represents a technological evolution of traditional romance scams.

Emerging Deepfake Threats

Real-time Video Call Impersonation: Advancements now allow for real-time facial replacement during video calls, making verification protocols that rely on video confirmation increasingly vulnerable.

Voice Clone Scams Targeting Families: Cases have emerged where scammers use AI to clone a family member’s voice, then call relatives claiming to be in an emergency situation requiring immediate financial assistance.

Deepfake Identity Theft: Beyond financial scams, deepfakes are increasingly used for identity theft to access secure systems, bypass biometric security, or create fraudulent identification documents.

Global Response Trends

Different jurisdictions are responding to these threats with varying approaches:

- EU: Implementing broad AI regulations that include provisions for deepfake disclosure

- China: Instituting specific regulations against deepfakes requiring clear labeling

- United States: Developing industry standards and focusing on detection technology

- Southeast Asia: Establishing regional cooperation frameworks similar to the existing Singapore-Hong Kong collaboration

The proliferation of these scams highlights the need for continued technological countermeasures in addition to traditional fraud awareness training and verification procedures.

In a case reported by Hong Kong police, a finance worker at a multinational company was tricked into transferring about US$25 million (roughly 200 million Hong Kong dollars) to fraudsters after joining what appeared to be a legitimate video conference call.

The scam involved:

- The worker first received a suspicious message, supposedly from the company’s UK-based CFO, about a secret transaction.

- Though initially skeptical, the worker’s doubts were overcome after joining a video conference call.

- Everyone on the call appeared to be a colleague the worker recognized, but all participants were actually deepfake recreations.

- The scam was only discovered when the worker later checked with the company’s head office.

Hong Kong police reported making six arrests connected to such scams. They also noted that in other cases, stolen Hong Kong ID cards were used with AI deepfakes to trick facial recognition systems for fraudulent loan applications and bank account registrations.

This case highlights the growing sophistication of deepfake technology and the increasing concerns about its potential for fraud and other harmful uses.

Analyzing Deepfake Scams: The New Frontier of Digital Fraud

The Hong Kong Deepfake CFO Case: A Sophisticated Operation

The $25 million Hong Kong scam represents a significant evolution in financial fraud tactics. What makes this case particularly alarming is:

- Multi-layered deception – The scammers created not just one convincing deepfake but multiple synthetic identities of recognizable colleagues in a conference call setting

- Targeted approach – They specifically chose to impersonate the CFO, a high-authority figure with legitimate reasons to request financial transfers.

- Social engineering – They overcame the victim’s initial skepticism by creating a realistic social context (the conference call) that normalized the unusual request.

The Growing Threat Landscape of Deepfake Scams

Deepfake-enabled fraud is expanding in several concerning directions:

1. Financial Fraud Variations

- Executive impersonation – Like the Hong Kong case, targeting finance departments by mimicking executives

- Investment scams – Creating fake testimonials or celebrity endorsements for fraudulent schemes

- Banking verification – Bypassing facial recognition and voice authentication systems

2. Identity Theft Applications

- As seen in the Hong Kong ID card cases, deepfakes are being used to create synthetic identities for loan applications, bank account creation, and bypassing KYC (Know Your Customer) protocols.

3. Technical Evolution

- Reduced technical barriers – Creating convincing deepfakes once required significant technical expertise and computing resources, but user-friendly tools are making this technology accessible.

- Quality improvements – The technology is rapidly advancing in realism, making detection increasingly difficult.

- Real-time capabilities – Live video manipulation is becoming more feasible

Why Deepfake Scams Are Particularly Effective

Deepfake scams exploit fundamental human cognitive and social vulnerabilities:

- Trust in visual/audio evidence – Humans are naturally inclined to trust what they see and hear

- Authority deference – People tend to comply with requests from authority figures

- Social proof – The presence of multiple, seemingly legitimate colleagues creates a sense of normalcy

- Security fatigue – Even security-conscious individuals can become complacent when faced with seemingly strong evidence

Protection Strategies

For Organizations

- Multi-factor verification protocols – Implement out-of-band verification for large transfers (separate communication channels)

- Code word systems – Establish private verification phrases known only to relevant parties

- AI detection tools – Deploy technologies that can flag potential deepfakes

- Training programs – Educate employees about these threats with specific examples
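The first two measures above, out-of-band verification and code words, can be combined into a simple release rule. The following is a minimal sketch, not a production control: the threshold value, the `TransferRequest` fields, the channel names, and the function names are all hypothetical, and a real system would store code phrases with a salted key-derivation function rather than a bare hash.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical policy value, chosen only for illustration.
LARGE_TRANSFER_THRESHOLD = 10_000


@dataclass(frozen=True)
class TransferRequest:
    amount: float
    request_channel: str  # where the request arrived, e.g. "video_call"
    confirmed_channels: frozenset = frozenset()  # channels used to re-contact the requester


def phrase_digest(phrase: str) -> str:
    """Normalize a code phrase and hash it so the plain phrase is not stored."""
    return hashlib.sha256(phrase.strip().lower().encode("utf-8")).hexdigest()


def code_phrase_matches(supplied: str, stored_digest: str) -> bool:
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(phrase_digest(supplied), stored_digest)


def may_release(request: TransferRequest, supplied_phrase: str, stored_digest: str) -> bool:
    """Allow small transfers; large ones need a second channel AND the code phrase."""
    if request.amount < LARGE_TRANSFER_THRESHOLD:
        return True
    # A confirmation only counts if it used a channel other than the one
    # the request arrived on (a deepfake call cannot confirm itself).
    out_of_band = any(
        channel != request.request_channel for channel in request.confirmed_channels
    )
    return out_of_band and code_phrase_matches(supplied_phrase, stored_digest)
```

Under this rule, a request that arrives on a video call and is "confirmed" only on that same call is refused, however convincing the participants look. The design choice is that the second channel must be one the employee initiates using contact details already on file.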

For Individuals

- Healthy skepticism – Question unexpected requests, especially those involving finances or sensitive information

- Verification habits – Use different communication channels to confirm unusual requests.

- Context awareness – Be especially vigilant when urgency or secrecy is emphasised.

- Technical indicators – Look for inconsistencies in deepfakes (unnatural eye movements, lighting inconsistencies, audio-visual misalignment)
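The habits above can be made concrete as a rough red-flag check on an incoming message. This is only an illustrative sketch: the keyword patterns and the rule in `needs_second_channel` are assumptions made for the example, not a tested detection method, and simple keyword matching will miss many real scams.

```python
import re

# Hypothetical keyword patterns for illustration; real use would need tuning.
URGENCY = re.compile(r"\b(urgent|immediately|right now|asap)\b", re.IGNORECASE)
SECRECY = re.compile(r"\b(secret|confidential|don't tell|do not tell)\b", re.IGNORECASE)
MONEY = re.compile(r"\b(transfer|wire|payment|bank account|crypto)\b", re.IGNORECASE)


def red_flags(message: str) -> list[str]:
    """Return the names of the warning signs found in the message."""
    flags = []
    if URGENCY.search(message):
        flags.append("urgency")
    if SECRECY.search(message):
        flags.append("secrecy")
    if MONEY.search(message):
        flags.append("money")
    return flags


def needs_second_channel(message: str) -> bool:
    """Suggest confirming through another channel when a money request
    is combined with urgency or secrecy."""
    flags = red_flags(message)
    return "money" in flags and len(flags) >= 2


print(red_flags("This is a secret transaction. Please transfer the funds immediately."))
```

A flagged message is not proof of fraud. The point is to prompt the verification step, such as calling the sender back on a known number, before acting.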

The Future of Deepfake Fraud

The deepfake threat is likely to evolve in concerning ways:

- Targeted personalization – Using information gleaned from social media to create more convincing personalized scams

- Hybrid attacks – Combining deepfakes with other attack vectors like compromised email accounts

- Scalability – Automating parts of the scam process to target more victims simultaneously

As this technology continues to advance, the line between authentic and synthetic media will become increasingly blurred, requiring both technological countermeasures and a fundamental shift in how we verify identity and truth in digital communications.

What Are Deepfake Scams?

Deepfake scams involve using artificial intelligence (AI) technology to create compelling fake voice recordings or videos that impersonate real people. The goal is typically to trick victims into transferring money or taking urgent action.

Key Technologies Used

- Voice cloning: Requires just 10-15 seconds of original audio

- Face-swapping: Uses photos from social media to create fake video identities

- AI-powered audio and video manipulation

How Scammers Operate

- Emotional Manipulation: Scammers exploit human emotions such as fear, excitement, curiosity, guilt, and sadness.

- Creating Urgency: The primary goal is to make victims act quickly without rational thought.

Real-World Examples

- In Inner Mongolia, a victim transferred 4.3 million yuan after a scammer used face-swapping technology to impersonate a friend during a video call.

- Growing concerns in Europe about audio deepfakes mimicking family members’ voices

How to Protect Yourself

Identifying Fake Content

- Watch for unnatural lighting changes

- Look for strange blinking patterns

- Check lip synchronization

- Be suspicious of unusual speech patterns

Safety Practices

- Never act immediately on urgent requests

- Verify through alternative communication channels

- Contact the supposed sender through known, trusted methods

- Remember: “Seeing is not believing” in the age of AI

Expert Insights

“When a victim sees a video of a friend or loved one, they tend to believe it is real and that they are in need of help.” – Associate Professor Terence Sim, National University of Singapore

Governmental Response

Authorities like Singapore’s Ministry of Home Affairs are:

- Monitoring the technological threat

- Collaborating with research institutes

- Working with technology companies to develop countermeasures

Conclusion

Deepfake technology represents a sophisticated and evolving threat to personal and financial security. Awareness, skepticism, and verification are key to protecting oneself.
