In this piece, we’ll explore the concept of caching, its significance, and its fundamental applications. We will also delve into how it operates, the different types of caching available, and its real-world uses.

What exactly is caching?

At its core, caching is the technique of keeping a copy of data in a fast storage layer, the cache, so that it can be accessed more quickly. The primary objective is to minimise the time it takes to retrieve specific pieces of information. Reading data from slower persistent storage, such as hard disk drives (HDDs) or solid-state drives (SSDs), can be time-consuming and hinder performance. By keeping frequently accessed data in a high-speed cache, the system shortens this retrieval time for future requests.

Cache memory acts as an intermediary that stores data temporarily and is implemented on fast-access hardware such as RAM. It also allows previously computed results to be reused. When hardware or software requests specific data, the system first checks the cache. If the data is found there (a cache hit), it is returned quickly; if it is not found (a cache miss), the system must fetch it from slower storage.

Why is caching so crucial?

Caching plays a pivotal role in system performance: it reduces overall processing time and increases efficiency. In modern computing it has become indispensable, because it avoids repeated requests for the same data and prevents redundant processing.

Caching Memory: A Journey to Speed Up Databases

In database applications, caching is a vital tool for enhancing speed and efficiency. When a frequently read portion of the database is served from cache memory instead of from disk, much of the latency caused by repeated data retrieval is removed. This matters most for high-traffic dynamic websites, where vast amounts of data are accessed regularly.

Another fascinating application of caching is accelerating query performance. Executing complex queries—especially those involving ordering and grouping—can be time-consuming. However, if these queries are executed repeatedly, storing their results in cache can significantly boost response times.
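As a rough illustration of caching query results, the sketch below stores the rows returned by a grouped and ordered query in a dictionary keyed by the SQL text and its parameters. The `orders` table, the `cached_query` helper, and the `executions` counter are all hypothetical names invented for this example, and SQLite is used only because it ships with Python.

```python
import sqlite3

# Hypothetical in-memory database with a small "orders" table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ann", 10.0), ("bob", 5.0), ("ann", 7.5), ("bob", 2.5)],
)

query_cache = {}  # maps (sql, params) -> list of result rows
executions = 0    # how many times the database actually ran a query


def cached_query(sql, params=()):
    """Return cached rows for a repeated query, running it only on first use."""
    global executions
    key = (sql, params)
    if key not in query_cache:
        executions += 1
        query_cache[key] = conn.execute(sql, params).fetchall()
    return query_cache[key]


SQL = "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
first = cached_query(SQL)
second = cached_query(SQL)  # served from the cache, no second execution
```

Cached results become stale once the underlying table changes, so a real system would also need a way to invalidate or refresh entries; that part is left out of this sketch.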

So, how does this caching mechanism operate? A designated portion of RAM is explicitly allocated for cache memory. Whenever software seeks data from storage, it first checks the cache to see if the desired information is already there. If it finds what it needs in the cache, the application retrieves it quickly from there. Conversely, if the required data isn’t available in the cache, the software must turn to its source—the hard drive—to fetch it. Once retrieved, this data is then stored in cache memory for future access.
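The read path described above can be sketched in a few lines. This is a minimal illustration, not a production design: `SlowStore` stands in for the hard drive, and `ReadCache`, along with its `hits` and `misses` counters, is a hypothetical name chosen for this example.

```python
class SlowStore:
    """Stand-in for slower persistent storage (hypothetical)."""

    def __init__(self, data):
        self._data = dict(data)
        self.reads = 0  # counts how often storage is actually touched

    def read(self, key):
        self.reads += 1
        return self._data[key]


class ReadCache:
    """Check the cache first; on a miss, fetch from the store and keep a copy."""

    def __init__(self, store):
        self.store = store
        self.entries = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.hits += 1           # cache hit: answer straight from memory
            return self.entries[key]
        self.misses += 1             # cache miss: go to the slower source
        value = self.store.read(key)
        self.entries[key] = value    # store the result for future requests
        return value


store = SlowStore({"user:1": "Ada", "user:2": "Linus"})
cache = ReadCache(store)
names = [cache.get(k) for k in ("user:1", "user:2", "user:1", "user:1")]
```

Four requests are made here, but the store is read only twice: the repeated requests for `user:1` are answered from the cache.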

Because cache memory has limited capacity, older or less relevant data must be discarded to make room for new entries. This requires an eviction algorithm that decides which entries to remove based on usage patterns. For example, an LRU (least recently used) algorithm removes the entry that has gone the longest without being accessed. It operates on the principle that data which has not been accessed recently is less likely to be needed again soon, which makes it a candidate for removal.
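One common way to sketch LRU eviction in Python is with `collections.OrderedDict`, which remembers insertion order and can move a key to the end when it is used. The `LRUCache` class below is an illustrative minimum with hypothetical method names, not the implementation used by any particular database.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest use first, newest use last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used

    def keys(self):
        return list(self._items)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now more recently used than "b"
cache.put("c", 3)  # over capacity, so "b" is evicted
```

After these calls the cache holds `a` and `c`; `b` was removed because it had gone the longest without being accessed.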

However, one significant hurdle associated with caches is the cache miss, which occurs when an application requests data that is not in the cache. Frequent cache misses lead to inefficiency, since each miss means the system checks the cache and must then still go to the underlying storage (the database or hard drive), resulting in additional work and diminished performance.
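How often misses occur can be expressed as a hit ratio: hits divided by total requests. The sketch below replays a made-up sequence of keys through Python's built-in `functools.lru_cache` and reads the counters from `cache_info()`. The `hit_ratio` helper and the access trace are assumptions made for illustration; real workloads will behave differently.

```python
from functools import lru_cache


def hit_ratio(trace, cache_size):
    """Replay a sequence of keys through an LRU cache and return hits / requests."""

    @lru_cache(maxsize=cache_size)
    def load(key):
        # Stand-in for a slow read from storage.
        return key

    for key in trace:
        load(key)
    info = load.cache_info()
    return info.hits / (info.hits + info.misses)


# Hypothetical access pattern: three keys requested in a repeating cycle.
trace = ["a", "b", "c"] * 4

small = hit_ratio(trace, cache_size=2)  # cache too small for the working set
large = hit_ratio(trace, cache_size=3)  # cache fits all three keys
```

With room for only two entries, this cyclic pattern evicts each key just before it is needed again, so every request misses. With room for three, only the first request for each key misses and the remaining nine of twelve requests are hits.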

One effective strategy to combat this issue is simply increasing the size of the cache memory itself. Additionally, distributed caches come into play when dealing with larger datasets; these systems harness collective RAM resources across multiple interconnected computers to facilitate more efficient access.
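The idea of pooling RAM across machines can be sketched by assigning each key to one node with a hash. In the example below, `CacheNode` is a plain in-process object standing in for a remote machine, and `DistributedCache` is a hypothetical class written for this illustration; a real distributed cache would talk to its nodes over the network.

```python
import hashlib


class CacheNode:
    """Stand-in for one machine's RAM; a real node would be reached over the network."""

    def __init__(self, name):
        self.name = name
        self.entries = {}


class DistributedCache:
    """Spread keys across several nodes so their combined memory acts as one cache."""

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def _node_for(self, key):
        # Use a stable hash so every client maps a given key to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.nodes)
        return self.nodes[index]

    def put(self, key, value):
        self._node_for(key).entries[key] = value

    def get(self, key, default=None):
        return self._node_for(key).entries.get(key, default)


nodes = [CacheNode(f"node-{i}") for i in range(3)]
cache = DistributedCache(nodes)
for i in range(100):
    cache.put(f"item:{i}", i)
```

Simple modulo hashing like this reassigns most keys whenever a node is added or removed, which is why larger deployments often use consistent hashing instead.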

Thus unfolds the intricate narrative of caching memory—a pivotal player in optimising database interactions and enhancing overall system performance.

Exploring Different Caching Techniques

Various methods are employed to enhance performance and efficiency when it comes to caching. Let’s delve into some of the critical types of caching.

Database Caching: Within databases, a foundational level of caching already exists. This internal mechanism helps avoid executing the same queries repeatedly: when a query that has been run before is issued again, the database can return its results from the cache. A common way to implement this is to store results in a hash table as key-value pairs.

Memory Caching: In this approach, cached data is stored directly in RAM, which significantly speeds up access compared to traditional storage solutions like hard drives. Similar to database caching, this method utilises unique key-value pairs where each value represents cached information, and each key serves as its identifier. This technique is not only swift but also efficient and straightforward to implement.

Web Caching:

Web caching can be categorised into two main types:

1. Web Client Caching: Often referred to as web browser caching, this method operates on the client side and is widely used by internet users everywhere. When a web page loads, the browser saves resources such as images, text, media files, and scripts locally. If the user revisits the same page later, these resources can be retrieved from the cache rather than downloaded again from the internet, which speeds up loading significantly.

2. Web Server Caching: In contrast to client-side caching, web server caching occurs on the server itself, with the aim of reusing resources it has already generated. This technique is particularly beneficial for dynamic web pages and offers less advantage for static ones. By reducing server load and avoiding repeated work, it speeds up page delivery.

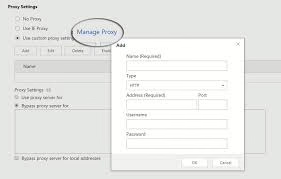

CDN Caching: Content Delivery Network (CDN) caching stores resources such as scripts, stylesheets, media files, and entire web pages on proxy servers. These proxy servers act as intermediaries between users and origin servers: when a user requests a resource, the proxy checks whether it holds a copy. If the resource is in its cache, the proxy delivers it directly; if not, the request is forwarded to the origin server. This reduces network delays and minimises the number of calls made to the origin server.

Where Caching Finds Its Place

Caching isn’t just a theoretical concept; it has practical applications across various industries. In health and wellness sectors, an effective caching system enables organisations to provide rapid service while optimising costs and accommodating growth as demand increases.

In advertising technology, where every millisecond counts during real-time bidding processes, quick access to bidding information is crucial. Database caching shines by retrieving data in mere milliseconds or less, ensuring that bids are placed promptly before they lose relevance.

Innovative caching solutions also greatly benefit mobile applications. These systems allow apps to deliver high performance that meets user expectations while scaling efficiently and lowering overall expenses.

The gaming and media industries rely on caching, too. It ensures seamless gameplay by providing instant responses for frequently requested data, keeping players engaged without frustrating delays.

Finally, in e-commerce settings, effective cache management becomes a strategic advantage that can make all the difference between securing a sale or losing a potential customer due to slow response times.

In summary, while caching offers numerous benefits that enhance speed and efficiency across various fields, it also introduces complexities that must be carefully managed for optimal results.

Instructions for Managing Cache on Maxthon Browser

1. Launch Maxthon Browser: Start by opening the Maxthon browser on your device by clicking its icon.

2. Open Settings Menu: Find the menu icon located in the top-right corner of the browser window. Click it, then choose Settings from the list that appears.

3. Go to Privacy Section: Within the Settings menu, locate and select the Privacy tab, where you can manage your browsing data, including cache management.

4. Clear Cached Data: To remove cached files, look for an option labelled Clear Browsing Data. Click this option to open a new window with various data-clearing choices.

5. Choose Cache Option: In this new window, you’ll see several checkboxes. To clear cache data specifically, check the box next to Cached images and files.

6. Select Timeframe for Clearing: Decide how far back you want to clear your cache; options typically include last hour, last 24 hours, or all time.

7. Confirm Your Selections: After making your choices, click on the Clear Data button at the bottom of the window and wait briefly while Maxthon processes your action.

8. Modify Future Cache Preferences: If you wish, return to Privacy settings to find options for managing cache more effectively in the future—such as setting automatic cleanup intervals or adjusting storage limits.

9. Exit Settings Menu: Finally, close the settings menu and resume browsing as usual; your cache will now be managed according to your specifications!