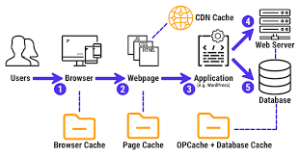

Let’s look at what browsers do and how they work. Since most of your users will reach your web application through a browser, understanding the basics of these tools is essential. At its core, a browser is a rendering engine: its job is to download a web page and display it in a form humans can understand. This is an oversimplification, but it serves our purpose for now. When a user types an address into the browser’s address bar, the browser downloads the document at that URL and renders it.

You may be accustomed to using well-known web browsers like Chrome, Maxthon, Firefox, Edge, or Safari. However, it’s essential to recognise that there are many other options available. Take Lynx, for instance—a minimalist and text-only browser that operates directly from your command line interface. Despite its simplicity, Lynx operates on the same fundamental principles as any mainstream browser. When a user inputs a web address (URL), the browser retrieves the corresponding document and displays it; the key distinction is that Lynx lacks a graphical rendering engine and instead presents content in a text format. This means that even popular sites like Google will appear quite differently.

We broadly know what browsers do, but let’s look more closely at the work these applications perform for us. In essence, a browser’s main responsibilities are:

1. DNS Resolution

2. HTTP Exchange

3. Rendering

And then it cycles through these steps repeatedly.

DNS Resolution is the initial phase where the browser determines which server to connect with after you type in a URL. It queries a DNS server to translate something like google.com into an IP address—such as 216.58.207.110—that it can reach out to.
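As a rough illustration of this lookup step, the sketch below asks the operating system's resolver for the addresses behind a hostname. The helper name `resolve` is hypothetical, and a real browser adds its own caching and may use other transports, so treat this as a minimal approximation rather than what any particular browser does.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the sorted, de-duplicated IP addresses found for hostname."""
    # getaddrinfo delegates to the operating system's resolver, which
    # consults the hosts file, local caches and the configured DNS servers.
    infos = socket.getaddrinfo(hostname, None, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] holds the IP address as a string.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # The addresses printed depend on the machine's configuration.
    print(resolve("localhost"))
```

Passing a public name such as `google.com` instead would trigger a real DNS query, and the addresses returned will vary by location and time.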

Once this identification is complete, we move on to HTTP Exchange. Here, the browser establishes a TCP connection with the identified server and begins communicating via HTTP, a widely used protocol for online interactions. Essentially, this process involves our browser sending out requests and receiving responses from the server.
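To make the exchange concrete, here is a minimal sketch that opens a TCP connection and speaks HTTP by hand. Everything in it is an assumption made for illustration: `HelloHandler` is a throwaway local server standing in for the real one, and `http_exchange` and `demo` are hypothetical helper names. A browser does far more (TLS, connection reuse, HTTP/2), so this only shows the bare request and response.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in server: answers every GET with a tiny HTML page."""

    def do_GET(self):
        body = b"<!doctype html><html><body>hello</body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def http_exchange(host: str, port: int, path: str = "/") -> str:
    """Open a TCP connection, send one GET request, return the raw response."""
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}:{port}\r\n"
        "Accept: */*\r\n"
        "Connection: close\r\n"
        "\r\n"
    )
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(request.encode("ascii"))
        chunks = []
        while True:
            chunk = conn.recv(4096)
            if not chunk:  # the server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks).decode("iso-8859-1")


def demo() -> str:
    """Start the local server on a free port, perform one exchange, stop it."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return http_exchange("127.0.0.1", server.server_port)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

The returned text contains the status line, the headers, a blank line and then the HTML body, which is the same shape as the responses discussed in the rest of this article.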

In summary, while you might favour familiar browsers for your online activities, exploring alternatives like Lynx can offer unique insights into how web navigation works behind the scenes!

Imagine this scenario: after the browser establishes a successful connection with the server located at google.com, it proceeds to send a request that appears as follows:

```
GET / HTTP/1.1
Host: google.com
Accept: */*
```

Let’s dissect this request step by step. The first line, `GET / HTTP/1.1`, tells the server that the browser wants to fetch the document at the location `/` and that the rest of the request follows version 1.1 of the HTTP protocol (versions 1.0 or 2 could also be used).

Next comes `Host: google.com`, the only header that HTTP/1.1 makes mandatory. A single server may host multiple domains (like google.com and google.co.uk), so the client must say which host the request is for.

Then we have `Accept: */*`, an optional header in which the browser tells the server that it will accept any type of response. The server might have the resource available in several formats, such as JSON, XML, or HTML, and can pick whichever it prefers.
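The three parts described above (request line, mandatory `Host` header, optional headers) can be assembled by hand. The following is a minimal sketch; `build_request` is a hypothetical helper written for this article, not part of any library.

```python
def build_request(method: str, path: str, host: str, headers=None) -> str:
    """Assemble the text of an HTTP/1.1 request with no body."""
    # Request line: method, target path and protocol version.
    lines = [f"{method} {path} HTTP/1.1"]
    # Host is the one header HTTP/1.1 requires on every request.
    lines.append(f"Host: {host}")
    # Any further headers, such as Accept, are optional.
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # Lines end with CRLF, and an empty line terminates the header section.
    return "\r\n".join(lines) + "\r\n\r\n"


if __name__ == "__main__":
    print(build_request("GET", "/", "google.com", {"Accept": "*/*"}))
```

The CRLF line endings and the closing blank line are invisible when the request is printed, but a server relies on them to know where the headers stop.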

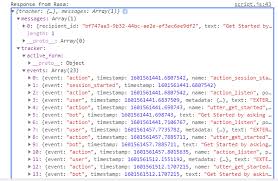

Once our browser—acting as a client—has completed its request, it’s time for the server to respond. Here’s what such a response might look like:

“`

HTTP/1.1 200 OK

Cache-Control: private, max-age=0

Content-Type: text/html; charset=ISO-8859-1

Server: gws

X-XSS-Protection: 1; mode=block

X-Frame-Options: SAMEORIGIN

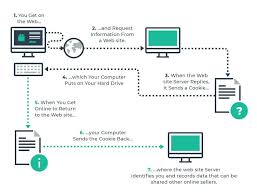

Set-Cookie: NID=1234; expires=Fri, 18-Jan-2019 18:25:04 GMT; path=/; domain=.google.com; HttpOnly

<!doctype html>

<html> …… </html>

“`

That’s quite a bit of information! The server communicates that our request was successful with a status code of 200 OK and includes several headers in its reply—such as identifying which server processed our request (`Server: gws`) and detailing its X-XSS-Protection policy.
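To see how a client might pull such a response apart, here is a small sketch that splits the raw text into status, headers and body. `parse_response` is a hypothetical helper; it ignores repeated headers, chunked bodies and other details that a real HTTP client has to handle.

```python
def parse_response(raw: str):
    """Split a raw HTTP response into (status_code, reason, headers, body)."""
    # An empty line separates the header section from the body.
    head, _, body = raw.partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    # Status line: protocol version, numeric status code, reason phrase.
    _version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        # Header names are case-insensitive, so normalise them to lowercase.
        headers[name.strip().lower()] = value.strip()
    return int(code), reason, headers, body


if __name__ == "__main__":
    sample = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: text/html; charset=ISO-8859-1\r\n"
        "Server: gws\r\n"
        "\r\n"
        "<!doctype html>"
    )
    print(parse_response(sample))
```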

At this point, you don’t need to grasp every single line of this response just yet—we’ll delve into HTTP protocols and headers later in this series. For now, it’s essential to recognise that there’s an ongoing exchange of information between client and server using HTTP.

Lastly comes rendering, the step that turns raw data into something users can read. Imagine if all your browser displayed were a raw string of characters! The body of the response contains content in the format declared by the `Content-Type` header. Here it is `text/html`, so we should expect HTML markup in the body, and that is exactly what we find.

This is where a web browser really excels. It parses the HTML, fetches any additional resources referenced in the markup, such as JavaScript files or CSS stylesheets, and presents the result to the user as quickly as possible. The outcome is something an everyday person can readily understand. For those curious about the details of what happens when we press enter in a browser’s address bar, I recommend reading “What Happens When”, a comprehensive breakdown of the mechanics involved.

Given that this series emphasises security, let me drop a hint based on what we’ve just explored: attackers often exploit weaknesses in the HTTP exchange and rendering steps. Vulnerabilities and malicious actors exist in many other areas too, but hardening these two levels can significantly improve your overall security posture.
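One part of rendering, finding the extra resources a page refers to, can be sketched with Python's standard HTML parser. The class name `SubresourceCollector` and the sample markup are made up for illustration; a real browser engine discovers many more resource types and then fetches and executes them.

```python
from html.parser import HTMLParser


class SubresourceCollector(HTMLParser):
    """Collect the scripts and stylesheets referenced by an HTML document."""

    def __init__(self):
        super().__init__()
        self.resources = []  # list of (kind, url) tuples in document order

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            self.resources.append(("script", attributes["src"]))
        elif tag == "link" and attributes.get("href"):
            # rel can hold several space-separated tokens in any letter case.
            rel_tokens = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel_tokens:
                self.resources.append(("stylesheet", attributes["href"]))


if __name__ == "__main__":
    collector = SubresourceCollector()
    collector.feed(
        '<html><head><link rel="stylesheet" href="/main.css">'
        '<script src="/app.js"></script></head><body>Hi</body></html>'
    )
    print(collector.resources)
```

Each URL collected this way leads to another round of DNS resolution and HTTP exchange, which is why the three steps repeat.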

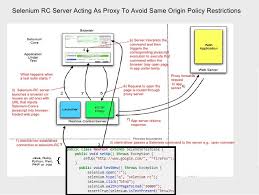

Now, let’s talk about browsers themselves. The four most widely used ones come from different developers: Chrome from Google, Firefox from Mozilla, Safari from Apple, and Edge from Microsoft. These companies not only compete fiercely for market share but also collaborate to enhance web standards—essentially the baseline requirements for browsers. The World Wide Web Consortium (W3C) oversees these standards’ development; however, it’s common for browsers to innovate features that eventually become part of these standards, especially regarding security measures.

Take Chrome 51 as an example. It introduced SameSite cookies, a feature designed to mitigate a specific vulnerability known as Cross-Site Request Forgery (CSRF). Other browser developers recognised its value and adopted it, resulting in SameSite becoming a recognised web standard. As it stands now, Safari remains the only major browser lacking support for SameSite cookies.

This situation reveals two crucial points: first, Safari appears less committed to prioritising user security (just kidding—SameSite cookie support will be available in Safari 12; by the time you read this article, it may have already launched). Second—and more crucially—fixing a vulnerability in one browser doesn’t guarantee that all users are safeguarded across different platforms.

When it comes to web security, your approach should be influenced by the capabilities that browser vendors provide. In today’s landscape, most browsers share a similar set of features and typically follow a unified development path. However, exceptions do arise, as illustrated in previous examples, and these must be factored into our security planning. For instance, if we choose to rely solely on SameSite cookies to defend against CSRF attacks, we need to recognise that this could jeopardise our users on Safari. It’s also crucial for our users to be aware of this potential risk.
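As a hedged sketch of what not relying solely on SameSite could look like, the code below sets a session cookie with the `SameSite` attribute and also provides a per-session CSRF token check that works in browsers ignoring that attribute. The token fallback is a common complementary technique, not something this article prescribes, and the function names are hypothetical. The `samesite` cookie attribute needs Python 3.8 or newer.

```python
import hmac
import secrets
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie header line for a SameSite session cookie."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["path"] = "/"
    cookie["session"]["httponly"] = True
    # Browsers that support SameSite will not attach this cookie to
    # cross-site requests; browsers that do not simply ignore the attribute.
    cookie["session"]["samesite"] = "Strict"
    return cookie.output()


def new_csrf_token() -> str:
    """Generate an unpredictable token to store server-side with the session."""
    return secrets.token_urlsafe(32)


def csrf_token_valid(expected: str, submitted: str) -> bool:
    """Compare the stored and submitted tokens in constant time."""
    return hmac.compare_digest(expected, submitted)


if __name__ == "__main__":
    print(session_cookie_header("abc123"))
    token = new_csrf_token()
    print(csrf_token_valid(token, token), csrf_token_valid(token, "forged"))
```

With both measures in place, a state-changing request is rejected when the submitted token does not match, whether or not the browser honoured `SameSite`.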

Moreover, it’s essential to understand that you have the discretion to determine which browser versions you will support. Supporting every single version would be unfeasible—just think about the challenges posed by Internet Explorer 6! That said, ensuring compatibility with recent versions of major browsers is usually a sound strategy. If you decide not to protect a specific platform, it’s wise to communicate this clearly to your users.

Here’s a tip: never encourage your users to stick with outdated browsers or actively support them. Even if you’ve implemented robust safety measures on your end, other developers may not have done the same. It’s best practice to guide users toward using the latest supported versions of prominent browsers.

Now, let’s consider another layer of complexity: the average user accesses your application through a third-party client, the browser, which can introduce security vulnerabilities of its own. Browser vendors often offer rewards, known as bug bounties, to researchers who find such vulnerabilities in their software. These issues are independent of how you implement your application; they stem from how the browser itself handles security.

Take Chrome’s reward program as an example: it allows security engineers to report any vulnerabilities they discover directly to the Chrome security team. Once verified, patches are released along with public advisories about the risks involved—and in return for their findings, researchers typically receive financial compensation from the program.

Companies like Google invest significantly in their Bug Bounty initiatives because they attract talented researchers eager for monetary rewards upon discovering flaws within applications. This creates a win-win situation: vendors enhance their software’s security while researchers are compensated for their valuable contributions.

We’ll delve deeper into these Bug Bounty programs later because I believe they warrant dedicated attention within the broader context of web security. For instance, Jake Archibald—a developer advocate at Google—recently uncovered a vulnerability affecting multiple browsers and documented his experiences engaging with various vendors in an insightful blog post that I highly recommend checking out.

A Developer’s Browser

By now, we should grasp a fundamental yet crucial idea: browsers are HTTP clients built for the average Internet user. They are far more capable than a bare HTTP client such as the one that ships with NodeJS (available via `require('http')`), but ultimately they are a more advanced evolution of those simpler clients. For developers like us, cURL, created by Daniel Stenberg, is often the HTTP client of choice. It is one of the tools web developers use most on a daily basis, and it lets us perform HTTP exchanges directly from the command line. For example:

```bash
$ curl -I localhost:8080
```

This command requests the document at localhost:8080 and receives a successful response from the local server:

```
HTTP/1.1 200 OK
Server: ecstatic-2.2.1
Content-Type: text/html
ETag: 23724049-4096-2018-07-20T11:20:35.526Z
Last-Modified: Fri, 20 Jul 2018 11:20:35 GMT
Cache-Control: max-age=3600
Date: Fri, 20 Jul 2018 11:21:02 GMT
Connection: keep-alive
```

In this instance, we used the `-I` flag to show only the response headers rather than printing the body of the response in our terminal. To see more detail about the exchange, including the request that was sent, we can add the `-v` (verbose) option:

```bash
$ curl -I -v localhost:8080
```

This command provides more context about what’s happening behind the scenes:

```
* Rebuilt URL to: localhost:8080/
*   Trying 127.0.0.1...
* Connected to localhost (127.0.0.1) port 8080 (#0)
> HEAD / HTTP/1.1
> Host: localhost:8080
> User-Agent: curl/7.47.0
> Accept: */*
>
< HTTP/1.1 200 OK
< Server: ecstatic-2.2.1
< Content-Type: text/html
< ETag: 23724049-4096-2018-07-20T11:20:35.526Z
< Last-Modified: Fri, 20 Jul 2018 11:20:35 GMT
< Cache-Control: max-age=3600
< Date: Fri, 20 Jul 2018 11:21:02 GMT
< Connection: keep-alive
<
* Connection #0 to host localhost left intact
```

Here we see not only what was sent but also how it all fits together in this complete HTTP transaction—a valuable insight for any developer keen on understanding web communications more thoroughly.


This output reveals nearly identical information that can also be accessed through DevTools in popular web browsers. Browsers function as sophisticated HTTP clients; while they offer a plethora of features like credential management and bookmarking, their primary purpose remains as tools for humans interacting with HTTP.

This distinction is crucial because when assessing your web application’s security, you often don’t need a full-fledged browser; using cURL can suffice for inspecting responses directly.
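Once the headers are available as plain text, inspecting them can be scripted. The sketch below reports which headers from a checklist are absent from a response. The checklist is purely illustrative and simply reuses two headers from the sample response shown earlier; which headers matter for a given application is a separate question, and `missing_headers` is a hypothetical helper.

```python
# Illustrative checklist only, taken from the sample response earlier in
# this article; it is not a recommendation of what every site must send.
EXPECTED_HEADERS = ("X-Frame-Options", "X-XSS-Protection")


def missing_headers(headers, expected=EXPECTED_HEADERS):
    """Return the expected header names that are absent from the response."""
    # Header names are case-insensitive, so compare them in lowercase.
    present = {name.lower() for name in headers}
    return [name for name in expected if name.lower() not in present]


if __name__ == "__main__":
    response_headers = {
        "Server": "ecstatic-2.2.1",
        "Content-Type": "text/html",
        "Cache-Control": "max-age=3600",
    }
    print(missing_headers(response_headers))
```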

Lastly, it’s worth mentioning that virtually anything can act as a browser in this sense. A mobile app that talks to APIs over HTTP is a browser too, albeit one you’ve built specifically for your needs.
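To illustrate that any program speaking HTTP plays the client role, here is a minimal sketch using Python's standard `http.client` to send a HEAD request, roughly what `curl -I` does. `DemoHandler` is a hypothetical local server started only so the example is self-contained, and the headers it returns are made up.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical local server that answers HEAD requests with a few headers."""

    def do_HEAD(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Cache-Control", "max-age=3600")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def head(host: str, port: int, path: str = "/"):
    """Send a HEAD request and return (status_code, headers_dict)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, dict(response.getheaders())
    finally:
        conn.close()


def demo():
    """Start the local server on a free port, query it once, stop it."""
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return head("127.0.0.1", server.server_port)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, headers = demo()
    print(status)
    for name, value in headers.items():
        print(f"{name}: {value}")
```

Pointing `head` at a real host and port instead of the demo server gives a scriptable way to look at response headers without opening a browser.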

How Maxthon Browser Works

1. Download and Installation

Begin by downloading the Maxthon browser from its official website. The installation process is straightforward; follow the on-screen prompts to complete it on your device.

2. User Interface Overview

After installation, launch Maxthon to explore its user-friendly interface. You’ll find a clean layout with a customisable homepage, tabs for multiple web pages, and an easy-to-navigate toolbar.

3. Tabs Management

Use tabs to manage multiple web pages simultaneously. To add a new tab, simply click the ‘+’ button next to the open tab. Organise your tabs into groups for better efficiency during browsing sessions.

4. Privacy Features

Maxthon offers built-in privacy features such as incognito mode, which prevents tracking while you browse. Activate this mode in the settings menu whenever you want to surf privately.

5. Cloud Syncing

One of Maxthon’s standout features is cloud syncing, allowing you to access bookmarks, preferences, and open tabs across devices seamlessly. Create an account to enable this feature.

6. Ad Blocker Integration

Enhance your browsing experience by using the integrated ad blocker that automatically removes intrusive advertisements from web pages, speeding up load times and improving page aesthetics.

7. Extensions and Customization

Explore the extensions marketplace for additional functionalities like password managers and enhanced security tools. You can also customise your browser’s appearance through themes available in settings.

8. Speed Dials for Quick Access

Set up speed dials on your homepage by choosing your favourite websites. This allows you to quickly access them each time you open the browser, which is ideal for regularly visited sites.

9. Support and Community Resources

If you encounter issues or have questions about features, visit Maxthon’s support forum or help centre. Community members share tips and troubleshooting advice.

By following these instructions, you’ll effectively utilise all of Maxthon’s capabilities to enhance your online experience!